- CUDA accelerates AI and scientific computing on NVIDIA GPUs with optimized libraries and tools.

- WSL2 on Windows 11/10 (21H2+) supports CUDA with suitable drivers, as well as Docker with GPU access.

- On Linux, align the NVIDIA driver and Toolkit versions (e.g., 560 + CUDA 12.6) and set PATH/LD_LIBRARY_PATH.

- The stack supports PyTorch and containers; Miniconda and tweaks like extra swap improve stability and workflow.

If you work with AI, data science, or simulations, installing NVIDIA CUDA is one of those steps that makes all the difference. GPU acceleration multiplies performance, and in this guide I explain, in detail and without beating around the bush, how to set it up on both Windows (including WSL) and native Linux.

You'll see everything from prerequisites and pre-checks to driver installation, the CUDA Toolkit, testing with PyTorch and Docker, and a bonus section on Miniconda, swap, and server settings. The guide brings together best practices and corrects common pitfalls so you don't waste time on the obvious.

What is CUDA and why you might care

CUDA is NVIDIA's ecosystem for parallel computing that lets you use your GPU for much more than graphics. It includes a compiler, libraries, and tools that accelerate workloads such as deep learning, scientific analysis, or simulations.

Versions like 11.8 or 12.6 come with performance improvements, support for recent hardware, fine-tuned memory management, and polished integration with frameworks like TensorFlow and PyTorch. The result: more speed and stability for demanding projects.

In addition to high-level libraries, the CUDA runtime orchestrates the execution of kernels, manages memory on the GPU and makes it easy for your applications to scale using thousands of cores in parallel. For modern AI workloads, it's literally the foundation.

If you develop on Windows, a key advantage is that WSL2 allows the use of CUDA within Linux distributions like Ubuntu or Debian, almost natively, with support for Docker and NVIDIA Container Toolkit. It's a very powerful option for mixed environments.

Requirements and compatibility

Before installing anything, make sure your hardware and system meet the requirements. You need an NVIDIA GPU with Compute Capability 3.5 or higher for CUDA 11.8, and 5.0 or higher for the 12.x series (such as 12.6), which dropped Kepler support.

On Linux, you can check for an NVIDIA GPU with: lspci | grep -i nvidia. To identify the recommended driver on Ubuntu, run: ubuntu-drivers devices. On Windows, look under 'Display adapters' in Device Manager.
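If you prefer to script the GPU presence check, the same filter can be sketched in Python. This is a minimal sketch, not an official tool: the function names are made up for illustration, and the sample line in the usage note is an invented example of what lspci might print.

```python
import shutil
import subprocess


def nvidia_devices(lspci_output: str) -> list[str]:
    """Return the lspci lines that mention NVIDIA (case-insensitive)."""
    return [
        line.strip()
        for line in lspci_output.splitlines()
        if "nvidia" in line.lower()
    ]


def detect_nvidia_gpus() -> list[str]:
    """Run lspci if it is installed; return [] when it is not available."""
    if shutil.which("lspci") is None:
        return []
    result = subprocess.run(["lspci"], capture_output=True, text=True, check=False)
    return nvidia_devices(result.stdout)
```

Calling detect_nvidia_gpus() returns an empty list when no NVIDIA device is visible. You can also feed nvidia_devices() any saved lspci output, for example a line such as "01:00.0 VGA compatible controller: NVIDIA Corporation GA102 [GeForce RTX 3090] (rev a1)".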

As for operating systems, CUDA 11.8 has been widely used on 64-bit Windows 10/11 and on distributions like Ubuntu 18.04 and 20.04, as well as RHEL/CentOS 7/8. For WSL, Windows 11 and Windows 10 version 21H2 or later support GPU compute with CUDA within the Linux subsystem.

For WSL2, make sure you have an updated kernel. 5.10.43.3 or later is required. You can check the version from PowerShell with: wsl cat /proc/version. Also, keep Windows Update up to date (Settings > Windows Update > Check for updates).
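The kernel check can also be automated. The following is a minimal sketch that assumes /proc/version begins with "Linux version" followed by a dotted number, as it does on typical WSL2 kernels; the helper names are hypothetical and the 5.10.43.3 threshold is the one quoted in this guide.

```python
import re
from pathlib import Path
from typing import Optional

MIN_WSL_KERNEL = (5, 10, 43, 3)  # minimum kernel quoted in this guide


def parse_kernel_version(proc_version: str) -> tuple[int, ...]:
    """Extract the dotted numeric kernel version from /proc/version text."""
    match = re.search(r"Linux version (\d+(?:\.\d+)*)", proc_version)
    if match is None:
        raise ValueError("no kernel version found in the given text")
    return tuple(int(part) for part in match.group(1).split("."))


def kernel_is_recent_enough(proc_version: str, minimum=MIN_WSL_KERNEL) -> bool:
    """Compare the parsed version with the minimum, component by component."""
    return parse_kernel_version(proc_version) >= minimum


def check_current_kernel() -> Optional[bool]:
    """Check the running kernel; return None if /proc/version does not exist."""
    proc = Path("/proc/version")
    if not proc.exists():
        return None
    return kernel_is_recent_enough(proc.read_text())
```

Run check_current_kernel() inside the WSL distribution. A result of False means the kernel is older than the minimum and should be updated.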

Regarding memory, for training tasks it is advisable to have at least 8 GB of system RAM and 4 GB of VRAM on the GPU, although many models will benefit from considerably more. An x86_64 CPU and a modern C++ compiler (C++14 or higher) round out the list.

Installation on Windows 11/10 with WSL2

The correct sequence avoids headaches: first enable WSL and install Linux, then the NVIDIA drivers for Windows, and within WSL, the CUDA Toolkit plus your AI libraries. Optionally, add Docker Desktop at the end.

1) Enable WSL from PowerShell with administrator permissions: run the command below to activate the feature, and restart when prompted.

Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Windows-Subsystem-Linux

2) Update the WSL kernel to the latest version from PowerShell: wsl --update. Then install your preferred distribution (for example, Ubuntu 22.04 LTS):

wsl --install -d Ubuntu-22.04

3) Open the newly installed Ubuntu application, update the package indexes and packages, and install basic utilities. Keeping the system up to date reduces failures later on.

sudo apt update && sudo apt -y upgrade

4) Inside Ubuntu (WSL), install pip for Python and the CUDA Toolkit from the official repos. Do not install a Linux GPU driver inside WSL: the driver is installed on the Windows side.

sudo apt -y install python3-pip nvidia-cuda-toolkit

5) Add your user scripts directory to your PATH to avoid problems running tools installed with pip. This lets you call those utilities without typing absolute paths.

nano ~/.bashrc

# At the end of the file, add the following, replacing 'usergpu' with your username

export PATH=/home/usergpu/.local/bin${PATH:+:${PATH}}

# Save (Ctrl+O), exit (Ctrl+X), and reload

source ~/.bashrc

6) Install the NVIDIA driver for WSL. On Windows, download the CUDA-enabled driver from the NVIDIA/Microsoft website (GPU compute driver for WSL). After installing, restart Windows if necessary.

7) Check the kernel version in WSL and confirm that everything responds: wsl cat /proc/version. If you're on 5.10.43.3 or later, you're set. Keep Windows Update current to receive WSL2 improvements.

8) Install PyTorch with CUDA support inside Ubuntu (WSL). The cu118 index is a widely used, stable option. This is how you take advantage of the GPU in your notebooks or scripts.

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

9) Quick test in Python: load PyTorch and check whether CUDA is available and how many GPUs it detects. This confirms that the stack works.

python3

>>> import torch

>>> torch.cuda.device_count()

# Example output: 1 (or more, depending on your machine)

>>> torch.cuda.is_available()

# Expected: True

10) Docker with GPU on WSL: install Docker Desktop on Windows, start it, and run tests from Ubuntu on WSL. The NVIDIA Container Toolkit is supported in WSL for Linux-like scenarios.

docker run --rm -it --gpus=all nvcr.io/nvidia/k8s/cuda-sample:nbody nbody -gpu -benchmark -numdevices=1

If you see the 'only 0 devices available' error with multiple GPUs, a known remedy is to disable and re-enable each GPU in the Windows Device Manager (Display Adapters > Action > Disable and then Enable). Then, repeat the test.

Native installation on Linux (Ubuntu/Mint and derivatives)

On native Linux, the recommended path is: install the official NVIDIA driver, then the CUDA Toolkit, and finally, configure environment variables. In recent versions, the 560 branch of the driver and CUDA 12.6 work very well.

1) Add the graphics drivers PPA (Ubuntu) and install the 560 driver. Reboot so the kernel loads the updated module.

sudo add-apt-repository ppa:graphics-drivers/ppa

sudo apt update

sudo apt install -y nvidia-driver-560

# Reboot the machine

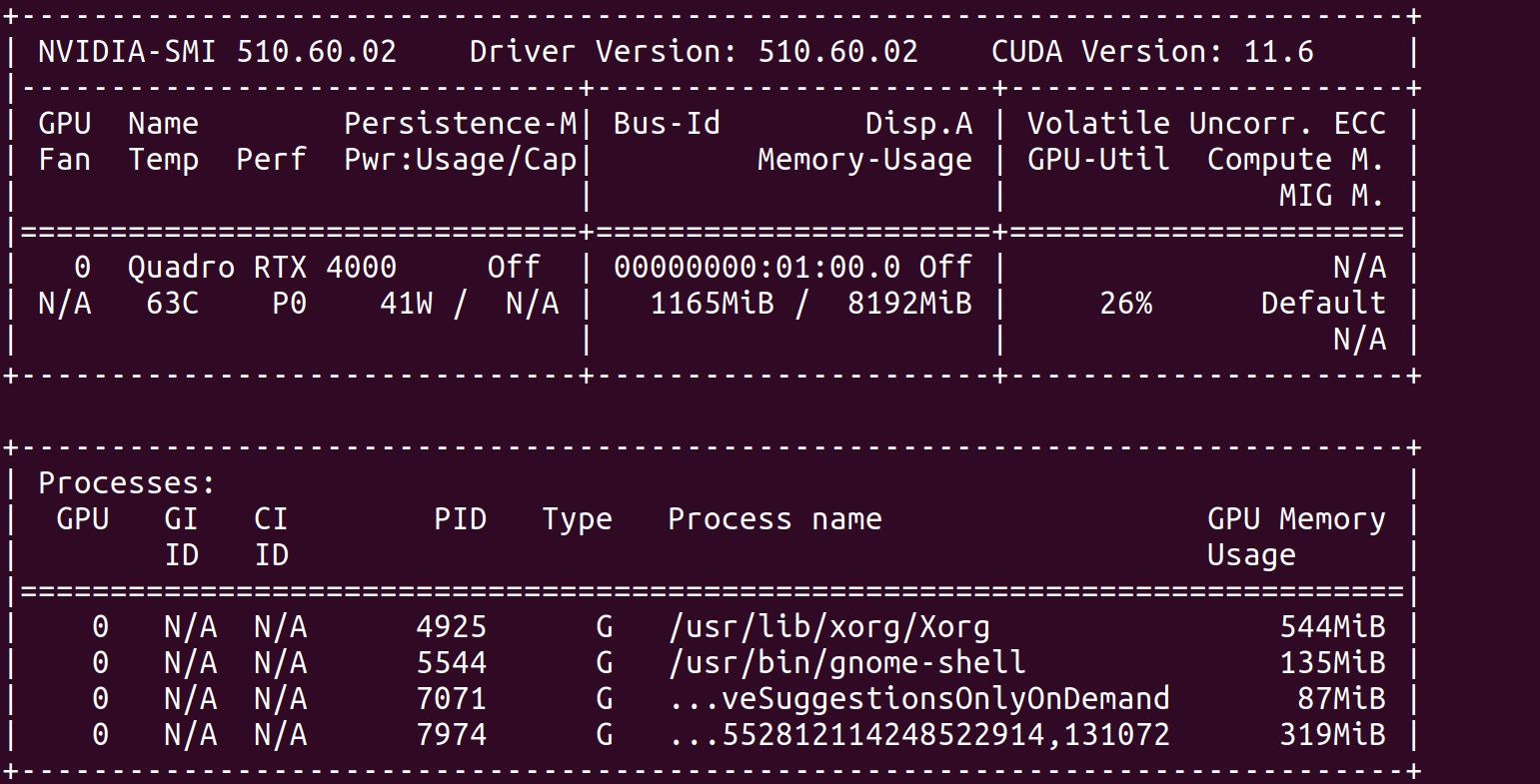

2) Verify with nvidia-smi that the driver is operational. It should show the driver version, the GPU, and its memory before you continue with CUDA.

nvidia-smi

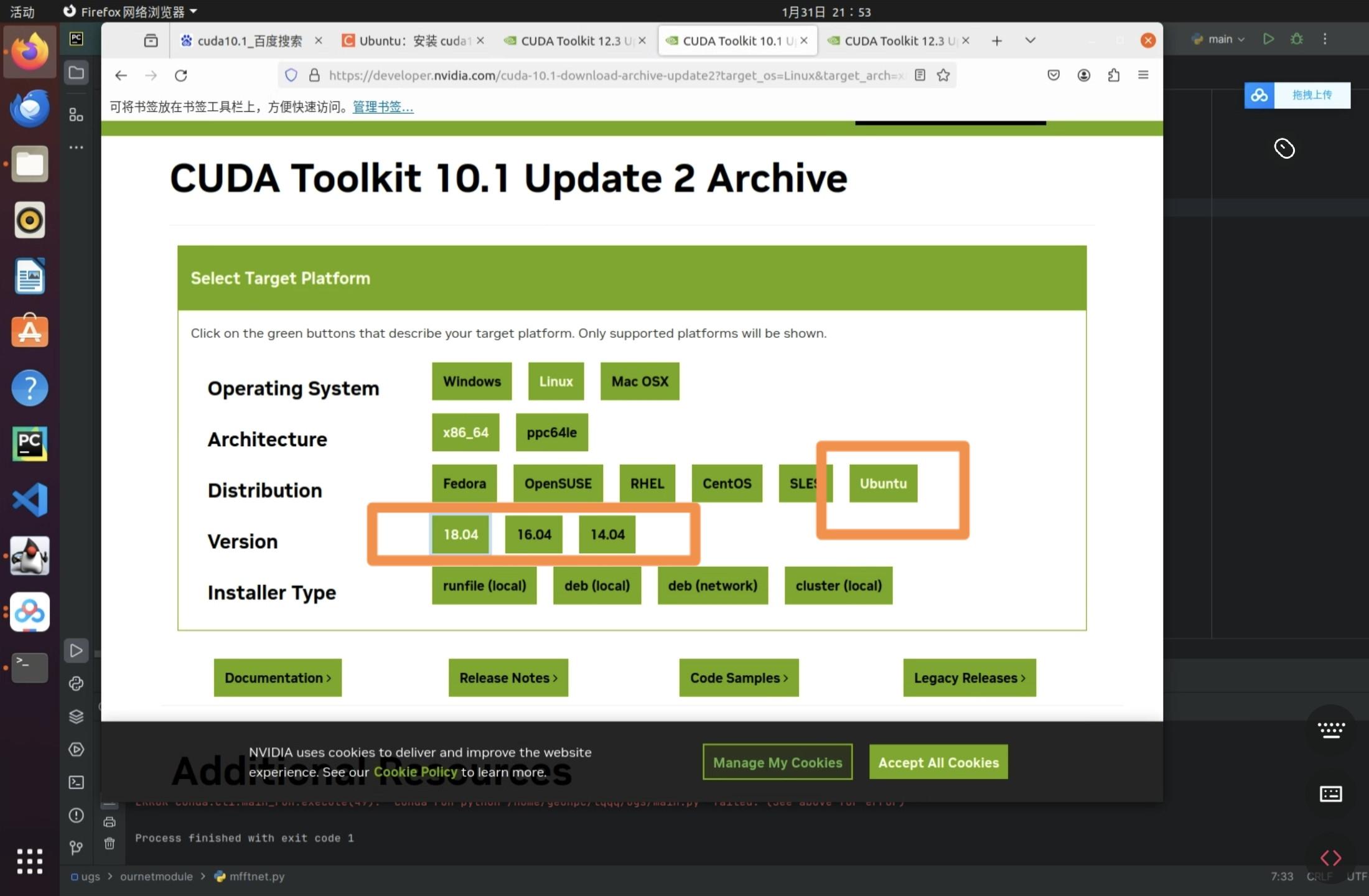

3) Install build dependencies and download the CUDA 12.6 installer (example with runfile). In the wizard, uncheck the drivers box if you already installed 560 to avoid conflicts.

sudo apt install -y build-essential

wget https://developer.download.nvidia.com/compute/cuda/12.6.3/local_installers/cuda_12.6.3_560.35.05_linux.run

sudo sh cuda_12.6.3_560.35.05_linux.run

4) Export PATH and LD_LIBRARY_PATH to the installed version, and reload the profile. This ensures that nvcc and the libs are available in the session.

nano ~/.bashrc

# Add at the end (adjust if your path differs)

export PATH=/usr/local/cuda-12.6/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-12.6/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

# Save and reload

source ~/.bashrc

5) Check the CUDA compiler version. If 'nvcc --version' responds correctly, the Toolkit is installed and reachable from your PATH. If it does not, many builds will fail.

nvcc --version
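To use the compiler check in a script, you can read the release number out of the nvcc output. This is a minimal sketch with hypothetical helper names; it assumes the output contains a line of the form "release 12.6", which is how nvcc normally reports its version.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_nvcc_release(output: str) -> Optional[tuple[int, int]]:
    """Return (major, minor) from `nvcc --version` text, or None if absent."""
    match = re.search(r"release (\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_cuda_release() -> Optional[tuple[int, int]]:
    """Run nvcc when it is on PATH; return None if it cannot be found."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None
    result = subprocess.run(
        [nvcc, "--version"], capture_output=True, text=True, check=False
    )
    return parse_nvcc_release(result.stdout)
```

A return value of None from installed_cuda_release() usually points to a PATH problem rather than a failed installation, so recheck the exports from the previous step first.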

Additional notes for other versions: if you install CUDA 11.8 with the runfile, the process is similar and the path is usually /usr/local/cuda-11.8. In any case, check NVIDIA's driver compatibility matrix to match the CUDA version and the driver properly.
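As a rough way to automate the driver check, you can compare the installed driver with the version embedded in the runfile name (560.35.05 for cuda_12.6.3_560.35.05_linux.run). This is a sketch under assumptions: the helper names are hypothetical, and using the bundled driver as the threshold is a conservative choice made here for illustration. The true minimum in NVIDIA's compatibility table may be lower.

```python
import shutil
import subprocess
from typing import Optional

# Driver version embedded in the runfile name used in this guide (an
# assumed, conservative threshold; not NVIDIA's official minimum).
BUNDLED_DRIVER = "560.35.05"


def version_tuple(version: str) -> tuple[int, ...]:
    """Turn '560.35.05' into (560, 35, 5) for numeric comparison."""
    return tuple(int(part) for part in version.strip().split("."))


def driver_at_least(installed: str, required: str = BUNDLED_DRIVER) -> bool:
    """True when the installed driver is the same as or newer than required."""
    return version_tuple(installed) >= version_tuple(required)


def installed_driver_version() -> Optional[str]:
    """Ask nvidia-smi for the driver version; None if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    lines = result.stdout.strip().splitlines()
    return lines[0].strip() if lines else None
```

If driver_at_least() returns False for your driver, that does not necessarily mean the setup is broken. It means you should look up the exact minimum for your CUDA version in the official table.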

Miniconda, PyTorch and ecosystem

Managing environments with Miniconda is extremely convenient: it isolates dependencies, simplifies builds, and lets you switch between Python versions. Ideal for AI and multi-project workflows.

Install Miniconda on Linux with these steps, accept the license, and restart the terminal at the end. After that, you will see the base environment active.

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

bash ./Miniconda3-latest-Linux-x86_64.sh

# Enter > scroll through the license > yes > Enter > yes

Some graphics packages may need python3-tk on Ubuntu/Mint systems. It's also useful to have git handy.

sudo apt update -y && sudo apt install -y python3-tk git

With the environment ready, install a stable PyTorch build with CUDA (cu118), or a nightly build if you want the latest performance work (e.g., cu124). Choose based on whether you value stability or new features more.

# Stable (example: cu118)

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

# Nightly (example: cu124)

pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cu124

If you're working with creative pipelines like ComfyUI, you can clone its repo and launch the app. To access it from another computer on the network, use the --listen 0.0.0.0 flag. This is very practical on headless servers.

git clone https://github.com/comfyanonymous/ComfyUI

cd ComfyUI

pip install -r requirements.txt

python main.py --listen 0.0.0.0

Testing and validation (PyTorch and Docker)

Validating your installation saves you from surprises later. In Python, verify that PyTorch sees the GPU, can allocate memory, and that kernels launch without errors. The two key calls are torch.cuda.is_available() and torch.cuda.device_count().

python3

>>> import torch

>>> torch.cuda.is_available()

True

>>> torch.cuda.device_count()

1

Using Docker, try the NVIDIA 'nbody' sample container. If it works with --gpus=all, the container runtime is configured properly and the GPU is visible from containers.

docker run --rm -it --gpus=all nvcr.io/nvidia/k8s/cuda-sample:nbody nbody -gpu -benchmark -numdevices=1

On Windows+WSL, if you get the '0 devices' error, remember the trick of disabling/enabling GPUs in Device Manager. It is a known issue which sometimes occurs on computers with multiple cards.

Finally, don't forget to validate the CUDA compiler with nvcc -V and verify the driver installation with nvidia-smi. Both commands must run without errors.
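The Python side of these checks can be gathered into one small script. This is a minimal sketch with a hypothetical function name: it assumes the first GPU (device "cuda") is the one you want to test, and it simply reports that PyTorch is missing instead of failing when torch is not installed.

```python
import importlib.util


def cuda_smoke_test() -> dict:
    """Report what PyTorch sees; works even when torch is not installed."""
    report = {
        "torch_installed": False,
        "cuda_available": False,
        "device_count": 0,
        "matmul_ok": False,
    }
    if importlib.util.find_spec("torch") is None:
        return report

    import torch

    report["torch_installed"] = True
    report["cuda_available"] = bool(torch.cuda.is_available())
    report["device_count"] = int(torch.cuda.device_count())
    if report["cuda_available"]:
        # Allocate two small matrices on the GPU and multiply them, which
        # exercises both memory allocation and a kernel launch.
        a = torch.rand(256, 256, device="cuda")
        b = torch.rand(256, 256, device="cuda")
        c = a @ b
        torch.cuda.synchronize()  # wait for the kernel to finish
        report["matmul_ok"] = tuple(c.shape) == (256, 256)
    return report


if __name__ == "__main__":
    for key, value in cuda_smoke_test().items():
        print(f"{key}: {value}")
```

On a working setup, every field should be truthy. If cuda_available is False while nvidia-smi works, the usual suspect is a CPU-only PyTorch build.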

Useful tweaks in Linux: swap and server mode

To avoid crashes caused by running out of memory during intensive training runs, expanding the swap file can be a lifesaver. A size of 32 GB usually leaves enough headroom for large models.

sudo swapon --show

sudo swapoff -a

sudo dd if=/dev/zero of=/swapfile bs=1M count=32768 status=progress

sudo chmod 600 /swapfile

sudo mkswap /swapfile

sudo swapon /swapfile

sudo swapon --show

sudo nano /etc/fstab

# Add this line if it is not already present:

/swapfile none swap sw 0 0
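The count=32768 in the dd command comes from 32 GiB divided into 1 MiB blocks. The sketch below (hypothetical helper names) shows that arithmetic and reads back SwapTotal from /proc/meminfo text so you can confirm the new size. It assumes the kernel reports the value in kB, as Linux normally does.

```python
import re
from typing import Optional


def dd_block_count(size_gib: int, block_mib: int = 1) -> int:
    """Number of dd blocks needed for a swap file of size_gib GiB."""
    return size_gib * 1024 // block_mib


def swap_total_gib(meminfo_text: str) -> Optional[float]:
    """Read SwapTotal (reported in kB) from /proc/meminfo text, in GiB."""
    match = re.search(r"^SwapTotal:\s+(\d+)\s+kB", meminfo_text, re.MULTILINE)
    if match is None:
        return None
    return int(match.group(1)) / (1024 * 1024)
```

For a different size, dd_block_count(16) gives the count for a 16 GiB file. To verify the active swap, pass the contents of /proc/meminfo to swap_total_gib().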

On Linux Mint (and similarly on other distributions), you can boot into multi-user mode without a graphical environment to save resources, including some VRAM. This is ideal for servers or training nodes.

sudo nano /etc/default/grub

# Change "quiet splash" to "text"

sudo update-grub

sudo systemctl set-default multi-user.target

# Reboot; to start the desktop for the current session:

startx

Common problems and how to fix them

Misaligned driver and Toolkit: if you install a driver that is incompatible with your version of CUDA, you may encounter errors when compiling or launching kernels. Consult the NVIDIA compatibility table and align the versions.

Dependency conflicts on Linux: remove leftover packages from previous installations, and use apt or yum to resolve exact versions when the installer asks you to. Following the official guide closely helps a lot.

WSL without a suitable kernel: run wsl --update and check the version with 'wsl cat /proc/version'. Windows Update must be up to date to receive subsystem improvements.

Docker without GPU: make sure the NVIDIA Container Toolkit is installed and that Docker Desktop is running on Windows. Try the nbody container and check user permissions if anything fails.

PATH and libraries: if nvcc or the CUDA libraries are not found, check the PATH and LD_LIBRARY_PATH variables. Run 'source ~/.bashrc' again after editing and, if necessary, restart the session.
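A quick way to diagnose the PATH and library case is to check whether the expected CUDA directories appear in the two variables. The sketch below uses hypothetical helper names and assumes the /usr/local/cuda-12.6 prefix from the native Linux section; pass a different cuda_home if your installation lives elsewhere.

```python
import os


def missing_entries(env_value: str, required: list[str]) -> list[str]:
    """Return required directories absent from a colon-separated variable."""
    present = {os.path.normpath(p) for p in env_value.split(":") if p}
    return [d for d in required if os.path.normpath(d) not in present]


def diagnose_cuda_env(cuda_home: str = "/usr/local/cuda-12.6", environ=None) -> dict:
    """Map each variable name to the CUDA directories it is missing."""
    env = os.environ if environ is None else environ
    return {
        "PATH": missing_entries(env.get("PATH", ""), [f"{cuda_home}/bin"]),
        "LD_LIBRARY_PATH": missing_entries(
            env.get("LD_LIBRARY_PATH", ""), [f"{cuda_home}/lib64"]
        ),
    }
```

An empty list for both keys means the exports took effect in the current session. Any directory listed still needs to be added to ~/.bashrc, or the shell has not been reloaded.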

Cloud alternative: ready-to-use GPU instances

If you can't or don't want to set up locally, a cloud instance with GPUs takes the work off your hands. Services with A100, RTX 4090 or A6000 offer raw power and AI-ready templates.

On platforms that provide preconfigured pods, you can launch your environment in minutes, reduce costs with usage-based billing, and choose images optimized for PyTorch. For teams that rotate between models and need to scale, it is a very efficient route.

With all of the above, you now have the complete map: requirements, installation on Windows with WSL and on native Linux, key validations with PyTorch and Docker, plus productivity extras with Miniconda, swap, and server mode. The goal is for you to go from zero to training without hiccups, with a solid and easy-to-maintain stack.
