- Practical ways to import MQTT data into Excel: Python scripts, exports from MQTT Explorer, and data flows in Azure IoT.

- Full pipeline control with sources, transformations, and destinations, including persistence and schemas.

- Export to CSV or JSON and route data to cloud storage without losing messages or structure.

- Solutions to typical export problems: wrong formats, incomplete data, and large volumes.

If you work with IoT devices and need to move telemetry into a spreadsheet, you'll naturally wonder how to reliably turn MQTT messages into an Excel file. The good news is that there are several solid paths, from lightweight Python scripts to graphical tools and industrial pipelines in the cloud. Here I explain, in detail and without beating around the bush, what options you have and when each one is appropriate.

Whatever your experience, even if you're just starting out, you can choose anything from "plug and export" solutions to architectures with sources, transformations, and destinations. I'll show you practical methods (Python and MQTT Explorer), how to leverage Azure IoT data flows when the project scales, and tips for common problems and how to solve them.

Why import MQTT data into Excel, and what's the best fit in each case?

In personal or laboratory settings, a simple script is enough; for testing and debugging, a GUI like MQTT Explorer gets you out of trouble in seconds; and if your company needs stable flows with filtering, enrichment, and guaranteed delivery, a managed data flow is advisable, for example with Azure IoT Operations.

Option 1: Python script that dumps MQTT to Excel (fast and controlled)

A lightweight and effective solution is a small Python program that connects to the broker, subscribes to one or more topics, and saves the messages to an Excel sheet. This approach is ideal when you want portability, no dependence on a graphical interface, and fine-grained control over what is saved, how, and how much.

There is a public example called mqtt2excel that illustrates this pattern: you choose the broker, the Excel file name, and how many messages to store. If you do not specify a quantity, the script captures a limited number of messages (for example, the first 10 that arrive). The limit applies to all topics combined: if you are subscribed to two topics and receive nine messages from the first and one from the second, those are the ten records that end up in the spreadsheet.
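The combined limit boils down to a counter that ignores topic boundaries. The sketch below is a hypothetical helper (`RecordCollector` is not part of mqtt2excel) that shows the logic: every message, from any topic, counts toward the same total, and anything arriving after the limit is ignored.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class RecordCollector:
    """Hypothetical helper: keeps at most `limit` messages in total, across all topics."""
    limit: int = 10  # mirrors the "first 10 messages" default described above
    rows: list = field(default_factory=list)

    @property
    def done(self) -> bool:
        return len(self.rows) >= self.limit

    def add(self, topic: str, payload: bytes) -> bool:
        """Store one message with a UTC timestamp; return False once the limit is reached."""
        if self.done:
            return False
        stamp = datetime.now(timezone.utc).isoformat()
        self.rows.append((stamp, topic, payload.decode("utf-8", errors="replace")))
        return True
```

With two topics, nine messages from the first and one from the second fill a limit of 10; an eleventh message is rejected regardless of its topic.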

Before using it, you need Python installed and two libraries: one for writing Excel files and one for the MQTT client. The typical combination is xlwt (which writes the legacy .xls format) together with the Paho MQTT library. Example installation:

```shell
pip install xlwt
pip install paho-mqtt
```

Once you have the dependencies, adapt the example with your broker, the file name, and the topics. You can set a record limit so that the program stops automatically when the capture is complete. A pseudo-example:

```python
# MQTT2Excel.py comes from the mqtt2excel repository and must be importable
from MQTT2Excel import MQTT2Excel

broker = "BROKER_OR_ADDRESS"   # hostname or IP of your MQTT broker
salida = "readings-demo.xls"   # output workbook (xlwt writes .xls)

m2e = MQTT2Excel(broker, salida)
m2e.setRecordsNumber(50)  # total messages combined across all topics
m2e.addTopics("TOPIC1")
# If you need more topics:
# m2e.addTopics("TOPIC2")
m2e.start()
```

When you run it, it writes each message with its timestamp. When it reaches the specified number of records, it stops and leaves you with a file ready to open in Excel. If your broker requires a username/password or TLS, you can extend the script with a few extra lines for authentication and encryption.

Reference repository: github.com/gsampallo/mqtt2excel. Although it's just an example, it provides a solid foundation that you can customize for your specific situation.
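If you'd rather not depend on xlwt (which only produces .xls), one alternative is to write CSV, which Excel opens directly, and to add the username/password and TLS options mentioned earlier. The sketch below assumes paho-mqtt 2.x; `message_to_row` and `capture_to_csv` are hypothetical helpers, not part of mqtt2excel, and ports 1883/8883 are the conventional defaults rather than requirements.

```python
import csv
import threading
from datetime import datetime, timezone
from typing import List, Optional


def message_to_row(topic: str, payload: bytes, when: Optional[datetime] = None) -> List[str]:
    """Turn one MQTT message into a CSV row: UTC timestamp, topic, payload text."""
    when = when or datetime.now(timezone.utc)
    return [when.isoformat(), topic, payload.decode("utf-8", errors="replace")]


def capture_to_csv(host, topics, path, limit=50, username=None, password=None,
                   use_tls=False, port=None):
    """Subscribe to `topics`, collect `limit` messages in total, then write them to `path`."""
    import paho.mqtt.client as mqtt  # imported lazily: only needed for the live capture

    rows = []
    done = threading.Event()

    def on_connect(client, userdata, flags, reason_code, properties):
        # Subscribing here means subscriptions are restored after a reconnect.
        for topic in topics:
            client.subscribe(topic)

    def on_message(client, userdata, msg):
        if len(rows) < limit:
            rows.append(message_to_row(msg.topic, msg.payload))
        if len(rows) >= limit:
            done.set()

    client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
    if username:
        client.username_pw_set(username, password)
    if use_tls:
        client.tls_set()  # verifies the broker certificate against the system CA store
    client.on_connect = on_connect
    client.on_message = on_message
    client.connect(host, port or (8883 if use_tls else 1883))
    client.loop_start()
    done.wait()
    client.disconnect()
    client.loop_stop()

    # utf-8-sig adds a BOM so Excel detects the encoding when opening the CSV.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        writer = csv.writer(f)
        writer.writerow(["timestamp", "topic", "payload"])
        writer.writerows(rows)
```

A call such as `capture_to_csv("broker.example.com", ["sensors/#"], "readings.csv", username="user", password="secret", use_tls=True)` (placeholder values) would block until 50 messages arrive and then write the file.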

Option 2: Export with MQTT Explorer to CSV or JSON (ideal for debugging and sharing)

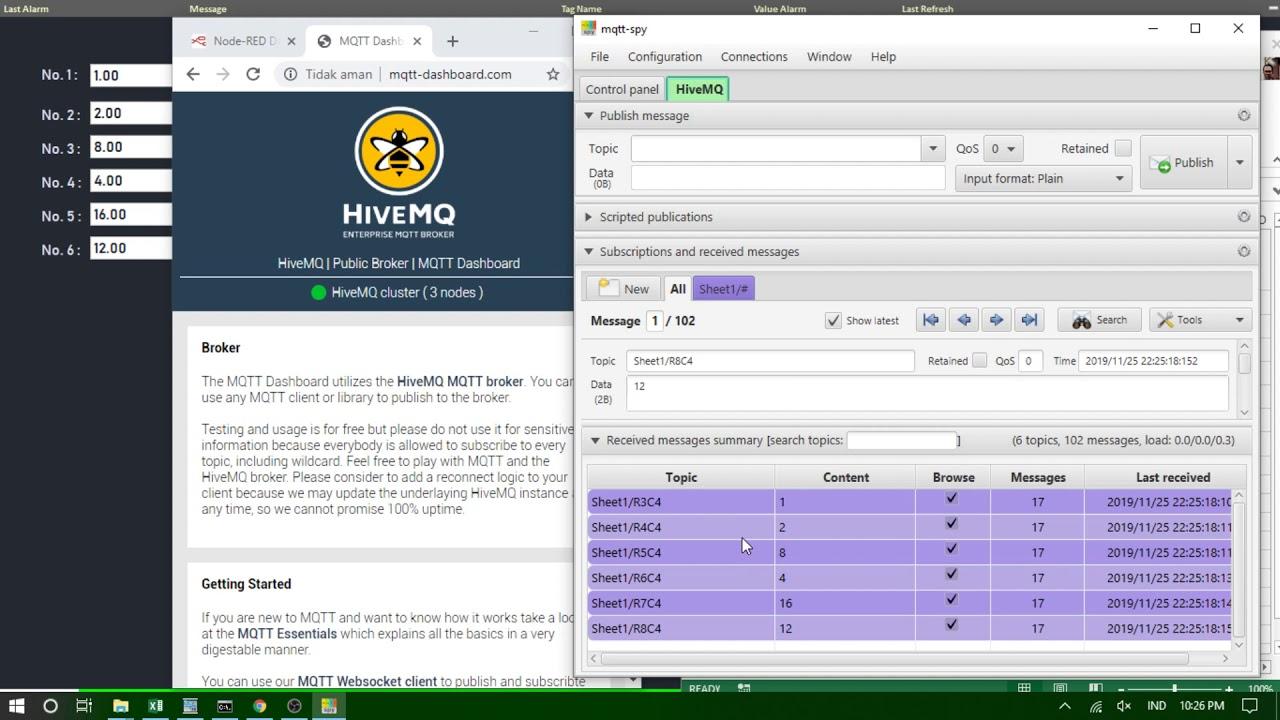

MQTT Explorer is a very useful graphical application for visualizing, testing, and understanding your MQTT messages. It lets you connect to brokers, explore the topic tree, publish test messages, and filter data through a simple interface. For Excel, the interesting part is that it can export the received data to standard formats such as CSV or JSON.

Key features to consider: connection management, topic exploration and subscription, publishing, a structured payload view, and filters and searches. In practice, it's a godsend for debugging and for taking "snapshots" of data that you can then open in Excel or your favorite analysis tool.

Typical steps to export data with MQTT Explorer: connect to the broker, navigate to the topic you are interested in, choose the export option, and select the format (CSV or JSON). You can apply filters by time range, topic, or content before exporting, which saves you cleanup later.

What is this export typically used for? Offline analysis, historical archiving, integration with other tools, or sharing with colleagues. An exported CSV opens in Excel with one click, and a JSON file slots easily into pipelines or databases.
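When the payloads are JSON, nested fields usually need to be flattened into columns before the data is useful in Excel. This is a minimal sketch that assumes you already have a list of records shaped like `{"topic": ..., "payload": "<JSON text>"}`; that shape is an assumption for illustration, not MQTT Explorer's export format, and `flatten` and `json_messages_to_csv` are hypothetical helpers.

```python
import csv
import json
from typing import Any


def flatten(obj: Any, prefix: str = "") -> dict:
    """Flatten nested dicts into dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            name = f"{prefix}.{key}" if prefix else str(key)
            flat.update(flatten(value, name))
    else:
        flat[prefix or "value"] = obj  # lists and scalars stay in a single cell
    return flat


def json_messages_to_csv(messages, path):
    """Write records of the form {"topic": str, "payload": JSON text} to a CSV file."""
    rows = []
    for m in messages:
        # Prefix payload keys with "payload" so they never collide with "topic".
        row = flatten(json.loads(m["payload"]), "payload")
        row["topic"] = m["topic"]
        rows.append(row)
    # Union of every column seen; rows lacking a column get an empty cell.
    columns = ["topic"] + sorted({col for row in rows for col in row} - {"topic"})
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=columns)
        writer.writeheader()
        writer.writerows(rows)
```

Taking the union of keys means messages with different payload shapes still land in one sheet, with blanks where a field is absent.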

Common use cases when exporting MQTT

The most common reasons for extracting messages to external files are usually the same: offline analysis, archiving, integration with other systems, sharing with third parties, debugging, and documentation. Exports are also a fast track for migrating data between platforms and for backups before maintenance.

Concrete examples: creating Excel reports with charts and statistics, storing historical series for audits, loading JSON into a data lake or your database, or sending a CSV to someone who does not have access to the broker. During testing, exporting a sample often helps you spot outliers and verify that you are publishing and receiving the correct data.

Option 3: Data flows in Azure IoT Operations (when the project grows)

If your organization needs something more robust than a script or an ad hoc export, you can orchestrate managed data flows in Azure IoT Operations. The idea is simple: define a pipeline with a source, optional transformations, and one or more destinations. All of this is managed through reusable profiles and endpoints.

First, you need an Azure IoT Operations instance and, if necessary, separate data flow profiles to distribute the load instead of concentrating too many flows in one. In addition to profiles, you'll need endpoints for sources and destinations: the local MQTT broker, Kafka, Event Hubs, OpenTelemetry, or storage such as Data Lake or Fabric OneLake.

Creating or updating a flow can be done via the CLI, Bicep, or Kubernetes manifests. For example, with the Azure CLI, you apply a JSON file with the flow properties, specifying the mode, operations, and source, transformation, and destination settings. This flexibility allows you to version the configuration and automate deployments.
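To give an idea of what such a JSON file contains, here is a sketch that builds a flow with the three operation types in Python and prints it as JSON. The field names (`mode`, `operations`, `operationType`, `sourceSettings`, `dataSources`, `builtInTransformationSettings`, `destinationSettings`, `dataDestination`) follow the Azure IoT Operations dataflow schema as I understand it, while the endpoint name `default`, the topics, and the `temperature` field are assumptions. Check the current reference, including the exact top-level shape the CLI expects, before applying it.

```python
import json


def build_dataflow(source_topics, destination, endpoint="default"):
    """Return a dataflow 'properties' object: Source -> transformation -> Destination.

    Field names reflect the Azure IoT Operations dataflow schema as documented;
    verify them against the current API reference before applying.
    """
    return {
        "mode": "Enabled",
        "operations": [
            {
                "operationType": "Source",
                "sourceSettings": {
                    "endpointRef": endpoint,
                    "dataSources": list(source_topics),  # MQTT filters may use + and #
                },
            },
            {
                "operationType": "BuiltInTransformation",
                "builtInTransformationSettings": {
                    "map": [
                        # Copy every field as-is...
                        {"inputs": ["*"], "output": "*"},
                        # ...and add a derived Fahrenheit field from a Celsius input.
                        {
                            "inputs": ["temperature"],
                            "output": "temperatureF",
                            "expression": "$1 * 9 / 5 + 32",
                        },
                    ]
                },
            },
            {
                "operationType": "Destination",
                "destinationSettings": {
                    "endpointRef": endpoint,
                    "dataDestination": destination,
                },
            },
        ],
    }


if __name__ == "__main__":
    config = build_dataflow(["thermostats/+/telemetry/#"], "factory/${inputTopic.2}")
    print(json.dumps(config, indent=2))
```

Generating the file from code (or keeping it as a versioned JSON file) is what makes the automated, repeatable deployments described above possible.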

Sources: MQTT or Kafka, topic filters, and shared subscriptions

A source is defined by a reference to an endpoint and a list of "dataSources". In MQTT you can use topic filters with wildcards (+ and #), while in Kafka you must list each topic explicitly. This is practical because you can reuse the same endpoint in multiple flows, changing only the topics to listen to.
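The wildcard rules themselves come from the MQTT specification: `+` matches exactly one level, and `#`, which must be the last level, matches that level and everything below it, including the parent. The sketch below (a hypothetical `topic_matches` helper, not how Azure IoT Operations implements matching) is handy for checking which topics a filter in `dataSources` would pick up. It assumes the filter is already valid and does not validate it.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if `topic` matches an MQTT topic filter that uses + and # wildcards."""
    # Per the MQTT spec, a leading wildcard never matches topics starting with '$' ($SYS...).
    if topic.startswith("$") and topic_filter[:1] in ("+", "#"):
        return False
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # this level and everything below, including the parent itself
        if i >= len(t_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != t_levels[i]:
            return False  # literal level differs
    return len(f_levels) == len(t_levels)
```

For example, `sensors/+/temp` matches `sensors/line1/temp` but not `sensors/line1/zone2/temp`, while `sensors/#` matches both `sensors` and `sensors/a/b/c`.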

Regarding shared subscriptions with the MQTT broker: if the flow profile has multiple instances, the $shared prefix is applied automatically and the load is distributed among the consumers. You don't need to add $shared manually unless you want to force it, and in general it's better to let the platform handle it.

If you use an asset as the source, the schema is inferred from the asset definition, and the data still arrives through the local MQTT broker underneath. Keep in mind one operational rule: if the message broker's default endpoint is not used as the source, it must be used as the destination.

Regarding the "source schema": it is currently used to display data points in the interface, not to validate or deserialize incoming messages. It helps keep the UI clear, but actual typing is applied only at serialization, if you configure it.

Transformations: enrich, filter, map, and create new properties

Transformations are optional; if you don't need to modify anything, you can omit them. When used, they run in clear phases: first enrichment, then filtering, and finally mapping (moving, renaming, or creating properties). Enrichment means cross-referencing with a reference dataset held in the platform's state store (for example, adding location and manufacturer based on a device identifier).

During filtering, you apply conditions to the source fields so that only what you are interested in passes through. In mapping, you can move fields, convert types, or calculate derived values (for example, temperature in Celsius), and also rename data points or add new properties for later steps.
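The order of the phases matters: enrichment adds fields that the filter and the mapping can then use. The sketch below reproduces that order in plain Python, not in the platform's own expression language. The `DEVICES` reference table and its values, the `deviceId`/`temperatureF` field names, and the plausibility range are all hypothetical.

```python
from typing import Optional

# Hypothetical reference dataset keyed by device id (stands in for the state store).
DEVICES = {
    "th-01": {"location": "Line 1", "manufacturer": "Contoso"},
}


def transform(msg: dict, low_f: float = -40.0, high_f: float = 150.0) -> Optional[dict]:
    """Run enrich, then filter, then map on one message; return None if it is filtered out."""
    # 1. Enrich: merge the reference fields that match the message's device id.
    enriched = {**msg, **DEVICES.get(msg.get("deviceId"), {})}

    # 2. Filter: drop readings that are missing or outside a plausible range.
    temp_f = enriched.get("temperatureF")
    if temp_f is None or not (low_f <= temp_f <= high_f):
        return None

    # 3. Map: rename fields, convert units, and add new properties.
    return {
        "device": enriched.get("deviceId"),
        "location": enriched.get("location", "unknown"),
        "manufacturer": enriched.get("manufacturer", "unknown"),
        "temperatureC": round((temp_f - 32) * 5 / 9, 2),
    }
```

A reading of 68 °F from `th-01` comes out as 20.0 °C tagged with its location, while a 212 °F reading is dropped by the filter before any mapping happens.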

If you want to serialize with guarantees before sending to the destination, you define a serialization schema and format. For storage destinations such as Microsoft Fabric or Azure Data Lake, Parquet or Delta with an explicit schema is recommended for consistency and efficient querying.

Practical detail: You can access MQTT metadata in formulas, such as the topic or user properties, using specific syntax (for example, @$metadata.topic). This flexibility allows you to "route" and transform not only by payload, but also by header information.

Disk persistence to avoid losing messages upon reboot

When continuity matters, you can request disk persistence (MQTT v5) so that if the broker or the flow restarts, its state is recovered. This works when the flow uses the MQTT broker as its source and the broker has persistence enabled, with dynamic mode enabled for subscriber queues.

Because this option is enabled per flow, only the pipelines that need it consume persistence resources. This way you avoid losses from power outages or restarts while keeping the rest of the system performing optimally.

Destinations: from an MQTT topic to Data Lake, ADX, or local storage

The destination is configured with an endpoint and a list of "dataDestinations". In MQTT or Event Grid it's a topic; in Kafka or Event Hubs, a topic or hub; in Data Lake, a container; in Azure Data Explorer, a table; and in local storage, a folder. This makes the same endpoint reusable by multiple flows, changing only where each piece of data lands.

For MQTT, you also have "dynamic destination topics" with variables like ${inputTopic} or ${inputTopic.index}, which let you build the output topic from segments of the input topic. Note that if a variable cannot be resolved (because the segment is missing), the message is discarded and a warning is logged, and wildcards are not allowed in destinations.
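The resolution rule can be sketched as follows. This is a hypothetical `resolve_topic` helper that assumes segments are numbered from 1 (so `${inputTopic.2}` is the second segment); it returns `None` and logs a warning when a segment is missing, and rejects wildcards, mirroring the behavior described above rather than reproducing the platform's code.

```python
import logging
import re
from typing import Optional

# Matches ${inputTopic} and ${inputTopic.N}; group 1 holds N when present.
_VAR = re.compile(r"\$\{inputTopic(?:\.(\d+))?\}")


def resolve_topic(template: str, input_topic: str) -> Optional[str]:
    """Expand ${inputTopic} and ${inputTopic.N} in a destination topic template.

    Returns None (and logs a warning) when a referenced segment does not exist.
    """
    if "+" in template or "#" in template:
        raise ValueError("wildcards are not allowed in destination topics")
    segments = input_topic.split("/")
    missing = []

    def substitute(match: "re.Match") -> str:
        if match.group(1) is None:
            return input_topic  # the whole input topic
        index = int(match.group(1))  # assumed 1-based
        if 1 <= index <= len(segments):
            return segments[index - 1]
        missing.append(index)
        return ""

    result = _VAR.sub(substitute, template)
    if missing:
        logging.warning("discarding message from %s: missing segment(s) %s", input_topic, missing)
        return None
    return result
```

With the input topic `plants/line7/temp`, the template `factory/${inputTopic.2}` resolves to `factory/line7`, whereas `factory/${inputTopic.5}` yields `None`, which corresponds to a discarded message.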

Example of flow logic and pipeline verification

Imagine a flow that reads from an MQTT topic, enriches the data with location information, converts a measurement to Fahrenheit, and filters out anomalous readings. It then delivers the result to a dynamically built “factory/…” topic using the second segment of the input topic. This topology suits industrial environments with multiple lines and sensors.

To verify that a flow works end to end, a proven approach is to set up a bidirectional MQTT bridge to a message bus (for example, Event Grid) and check that messages arrive and respect the schema, filters, and mappings. Testing with synthetic messages plus a sample export makes it easier to validate format and fields.

Export configuration and automate deployments (CLI, Bicep, Kubernetes)

You can manage these flows both from the operations interface and as infrastructure as code. With the CLI, you apply a JSON configuration; with Bicep, you define nested resources (profile, dataflow, endpoint references); and with Kubernetes, you describe a custom resource (defined by a CRD) with the operations specification. This declarative approach lets you version changes and scale without fragile manual steps.

In Bicep, for example, you would reference the IoT Operations instance, the custom location, and the default profile, and then create the "dataflow" resource with Source, BuiltInTransformation, and Destination operations. The same applies to YAML in Kubernetes, pointing to the appropriate profileRef in the solution namespace.

Common problems when exporting and how to overcome them

When using tools like MQTT Explorer, the export option may not appear or may be disabled. Update to the latest version, and confirm that you are connected and have selected data or a specific topic before looking for the export button.

Another common mistake is exporting in the wrong format or with incomplete data. Check your export settings and active filters, and if you're handling large volumes, split them into time windows or by topic to avoid crashes.
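Splitting by time window is easy to automate once each row carries a timestamp. A minimal sketch, assuming rows shaped as `(ISO timestamp, topic, payload)` and window sizes that divide 24 hours evenly; `split_by_window` is a hypothetical helper, and each resulting bucket can then be written to its own CSV file.

```python
from collections import defaultdict
from datetime import datetime


def split_by_window(rows, hours=1):
    """Group (iso_timestamp, topic, payload) rows into buckets of `hours` hours.

    Keys are the ISO start time of each window; `hours` should divide 24.
    """
    buckets = defaultdict(list)
    for stamp, topic, payload in rows:
        t = datetime.fromisoformat(stamp)
        # Round down to the start of the window containing this timestamp.
        start = t.replace(hour=t.hour - t.hour % hours, minute=0, second=0, microsecond=0)
        buckets[start.isoformat()].append((stamp, topic, payload))
    return dict(buckets)
```

Smaller files are easier for Excel to open, and a bad window can be re-exported without touching the others.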

If the exported file does not open or appears corrupt, try opening it with another application, retry the export, and check your free disk space. When an app freezes, restarting or reinstalling it usually clears temporary lockups.

In managed pipelines, monitor the alignment between schema and format (Parquet/Delta) and make sure the destination (table, container, folder) exists. Destination topics with unresolved variables cause messages to be discarded, so it's advisable to watch for warnings.

Good security and privacy practices on platforms

In corporate environments you will see screens for cookie consent and privacy settings, as on professional sites that display advertisements or job offers. Before accessing consoles or apps with embedded cookies, accept or reject non-essential cookies according to your policy, and remember that you can change your preferences later.

Some enterprise applications (for example, technical catalogs or knowledge hubs) load complex interfaces that require JavaScript and authentication. If you see messages like “loading application…”, check your permissions, whether scripts are enabled, and your corporate network; it's not a pipeline failure but a web-layer issue.

Industrial context: PLCs and real-time telemetry

In manufacturing, PLCs are the heart of process control; they are robust industrial computers that govern assembly lines, robots or any process that requires reliability and diagnosis. Many modern deployments bridge PLC→gateway→MQTT to expose measurements and events in a standardized way.

For analysts, being able to export a sample to Excel is key, whether for audits, quality control, or incident investigation. That's why the three levels coexist: ad hoc export for quick analysis, repeatable scripts for maintenance shifts, and cloud pipelines for continuous operation.

Tips if you're starting out with your own IoT device

If you're building a device and need to move its data into a spreadsheet, start with the essentials: which broker do you use, which topics do you publish, and how often do messages arrive? With that information, choosing between a script, a GUI, or a managed flow is much easier.

For an initial prototype, MQTT Explorer plus CSV export will give you immediate results; if you want to automate regular data collection, a Python script is very useful; and if you need to scale with transformation, governance, and multiple destinations, seriously consider a data flow in Azure IoT. If you plan to analyze the data further, it is also worth learning how to integrate Excel data with Power BI. At each stage, the key is to ensure format consistency and that no messages are lost on restarts.

This tour offers you three well-covered routes: the speed and control of Python, the convenience of exporting with MQTT Explorer, and the power of a pipeline in Azure IoT with versatile sources, transformations (enrich, filter, and map), persistence, and destinations. Whatever your starting point, you'll have your MQTT data in Excel or compatible formats without headaches, and with options to grow as the project demands.
